AI Video Wars Deep Dive | TED AI Show ft. Convai CEO | AI Quick Hits

Competition in AI video turns up to 11. AI-powered NPCs so real you won't believe they're not human. Meanwhile, Adobe drops an updated Terms of Use but questions still remain.

Hey Creative Technologists,

It's been a livelier week than usual, especially for video creation & privacy in the AI age — in this edition we’ll cover:

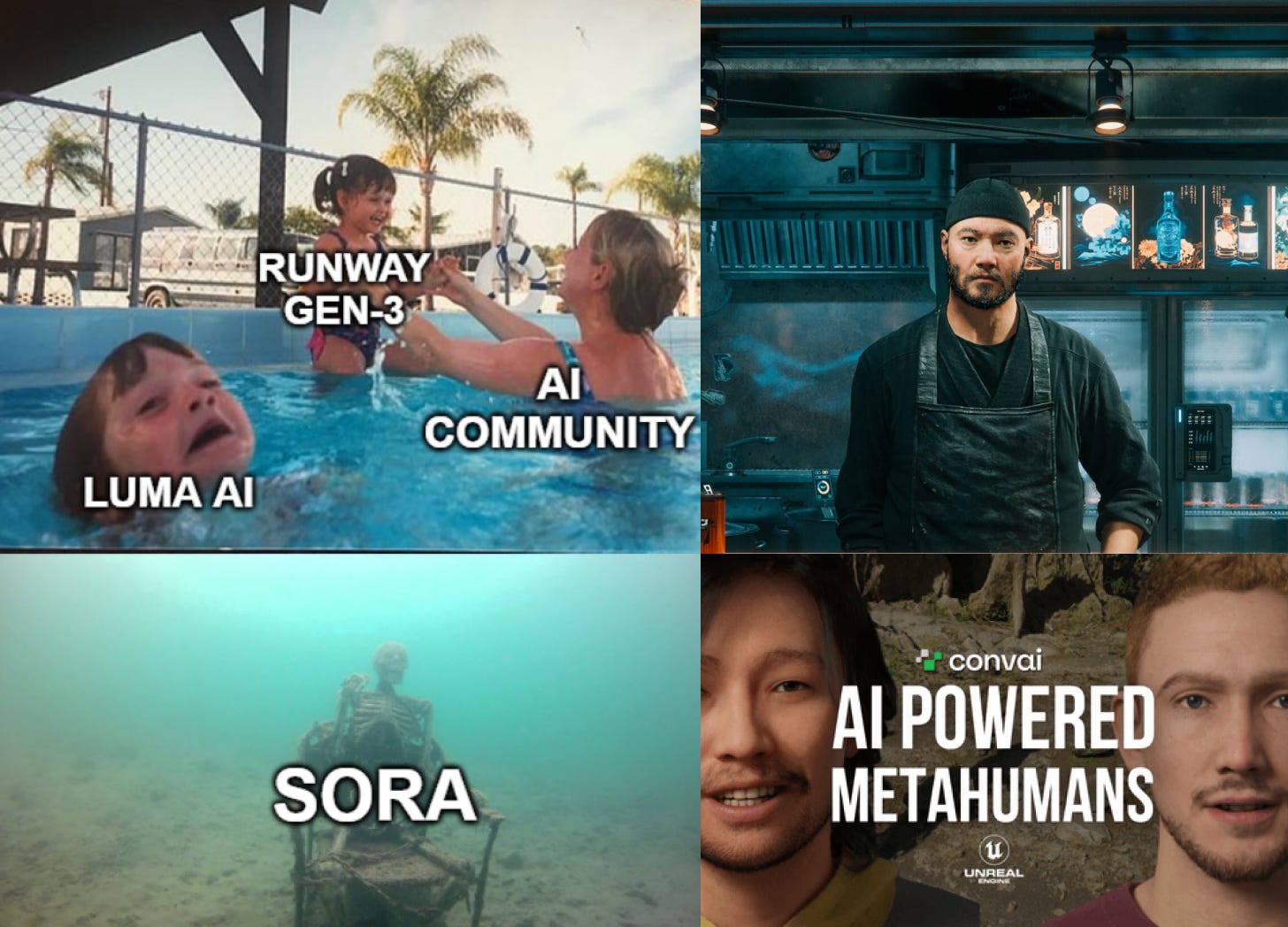

TED AI Show Ep 5: Your next best friend may be 100% AI w/ Convai CEO

Dive deep into AI video generation — Luma’s Dream Machine, Runway’s Gen-3, and where the heck are OpenAI & Google?

AI quick hits (Adobe / Microsoft’s response to widespread backlash for different yet deeply related reasons)

State of the art in AI-powered NPCs w/ Convai CEO Purnendu Mukherjee.

Remember that Ramen Shop NPC demo that took the internet by storm? I sat down with Convai's CEO, Purnendu Mukherjee, the genius behind the AI tech that Jensen Huang flexed on stage.

In this week’s episode of The TED AI Show, we get into:

AI NPCs so real, you'll forget they're not human

How AI NPCs change player engagement by forming emotional bonds

Infusing multimodality into AI NPCs to bring us closer to JARVIS

Authoring immersive characters with rich backstories

Mimicking the full range of human emotions in AI characters

NPCs in education, marketing and real-world AR experiences

Is AI making the Matrix a reality, and are we opting into it?

Check it out on Episode 5: The TED AI Show — available wherever you get your podcasts (direct episode links to: Spotify, Apple, YouTube)

Deep Dive: Competition in AI video generation turns up to 11 🌶️

It was 11 days ago that I was ranting about Chinese users getting Sora-class video generation before the US. In case you missed last week’s AI quick hits, Kling is an AI video generation model created by Kuaishou Technology, the second-largest short-form video giant in China.

Rumors suggest the big tech behemoths of Google and OpenAI couldn’t risk launching such a tool with the US elections around the corner, or before closing crucial data licensing deals. I had a chance to try Veo at I/O (though no access yet!) and it truly is a step up. Meanwhile, Runway and Pika Labs offer cool tools, but their generation quality was nowhere near that of these compute-rich and data-rich giants.

Enter Luma's Dream Machine: a much-needed step up in AI video tools.

No more ‘Ken Burns’ style clips with minimal movement - we’re talking real, dynamic action. It also signals a shift by Luma away from their focus on NeRFs/Gaussian Splatting (which was undoubtedly hard to monetize) towards AI video generation. The internet went wild, predominantly with memes — like this one by yours truly that caught fire on Twitter :)

Big jump in fidelity — but still slot machine AI

The increased movement is phenomenal, so is the temporal consistency, but I’m still left craving control. Like most image-to-video AI, it’s kinda like working with a chaotic golden retriever you’re trying to coax into doing cool stuff - or better yet - having your exact dream.

In other words, I think these tools are best suited to situations where you are open to surprises and co-developing the narrative vs. faithfully translating the vision in your script onto the screen. Luma is working on tools to exert more control, but it remains to be seen how far that will actually go.

Meanwhile, here's a selection of my test generations pulled together into a loose edit. Will experiment with a more stylized CG aesthetic next.

Tankers vs. speedboats: Google & Stability engineers defect to make magic happen in record time

The backstory of Luma (known primarily as a 3D computer vision startup) shipping a video model is fascinating, and perhaps emblematic of the difference between tanker ships and speedboats.

Though, my industry sources tell me the effort also involved ex-Stability engineers, and only a fraction of Luma’s employees are currently focused on video, which in my eyes makes this achievement even more amazing. They’ve crossed 1M creators in 4 days - so I suspect that will change :)

OpenAI and Google focusing on a bigger prize or letting primitives atrophy on the shelf?

No matter how you slice it, this is a cool story:

Google engineer working on AI video models realizes they’d be too slow to ship products with Veo and Sora.

Jumps ship, moves to a startup, trains a new video model, and ships it to consumers in 3 months 😮💨

Internet goes wild with Dream Machine creations, need moar H100s, send bids ASAP!

Meanwhile, Google has essentially dropped a Donald Glover collab video at I/O, and OpenAI doesn’t even have a waitlist for Sora… But hey, Ashton Kutcher says he’s bullish on the potential in a panel with Eric Schmidt.

Pretty wild, and emblematic of the difference between tanker ships like Google (and even OpenAI) vs. speedboats like Luma.

Here’s hoping the AI tanker ships actually release Sora and Veo level tech into the wild vs teasing collabs with A-list celebs.

Otherwise the visual AI creation world is passing them by while they sit on remarkable tech that is rapidly depreciating in value.

Who knows? Maybe they’re embroiled in the bigger battle for the future of search and assistant… but gosh AI video feels more like the battle to be the new YouTube and Netflix.

Runway responds by announcing 'Gen-3 Alpha’

While we wait for the Sora and Veo trains to arrive, Runway has announced Gen-3 for AI video generation. Luma’s Dream Machine suddenly has competition.

Initial results from Gen-3 stack up favorably vs Dream Machine. Though alas, Runway’s is just an announcement - with a ‘coming soon’ launch date. I gotta say, we’re all tired of the Sora approach of dropping videos ad hoc - ship fast!

No doubt Gen-3 is something they’ve worked on for a while, but the announcement seemed a bit rushed. Perhaps that was needed given how quick AI users are to move from one subscription to another: there is no loyalty, and the best product wins because the cost to change services is near zero.

The official word is we’ll see something in the coming days — which is PR speak for a release on the order of weeks vs. months.

Let’s see if OpenAI and Google decide to accelerate the launch of Sora and Veo. Also eager to see what Pika Labs does in this space fresh off another round of fundraising. Fun time to be in AI creation!

AI Quick Hits

Hedra drops Character-1

New foundational video model focused on humans — these are expressive generations brimming with emotion. Oh and they can do beards too :)

Adobe Updates Their Terms of Use — generative AI + added clarifications

After a widespread backlash from creatives over the past few weeks, Adobe has rolled out a new ToU that explicitly carves out GenAI training. A win for creators, but questions still persist. Here's a quick rundown:

Microsoft recalls Recall before launch: will take time to get it right

The real question is how Recall even got so far with such a limited security review that it required 2 backtracks. Truly baffling. Meanwhile, Apple’s marketing SVP had quite an answer to share re: MSFT missteps:

OpenAI board brings on ex-NSA director and Cyber Command chief

Ret. Gen. Nakasone is a smart hire since OpenAI needs to protect itself from sophisticated bad actors, including nation-states. This AI wave also has clear implications for the IC. Snowden-era revelations told us that NSA can "collect it all" - but now it can "know it all".

That’s it for this edition. As always, hit me up with thoughts, and I’ll see y’all in the next one!

Cheers,