Apple WWDC AI Deep Dive | TED AI Show w/ Dr. Patrick Lin | AI Quick Hits

From an AI-infused OS to Hollywood-grade 3D cameras, Apple's WWDC unveils a new era. Is it a game-changer or a privacy nightmare? Meanwhile, NVIDIA and China make their moves, and Adobe plays defense.

Hey Creative Technologists,

In this edition, we’ll dive deep into Apple’s WWDC announcements and more:

Apple WWDC: infusing AI everywhere with a split-compute architecture. A game-changer or cause for concern? (ft. Elon Musk)

TED AI Show Ep 4: Why we can’t fix bias with more AI w/ Patrick Lin, Ph.D.

AI quick hits (NVIDIA's Earth 2, Microsoft’s Recall Fumble, Adobe’s Terms of Service Outcry, and China’s Sora-Tier AI video)

Deep Dive: Is Apple’s AI a Game-Changer or a Privacy Risk?

In what was probably the most pivotal WWDC of the past decade, we are getting our first glimpse of what Cupertino has planned for pervasive AI across iOS, iPadOS, and macOS. Or, as Apple calls it, “AI for the rest of us.”

First off, Apple’s ChatGPT integration isn't as deep as I expected. Apple trained its own language and image models for most of what it showcased during the keynote, opting for a plurality of fine-tuned, task-specific models. Clearly, Cupertino has its house in order far more than the press and rumors suggested.

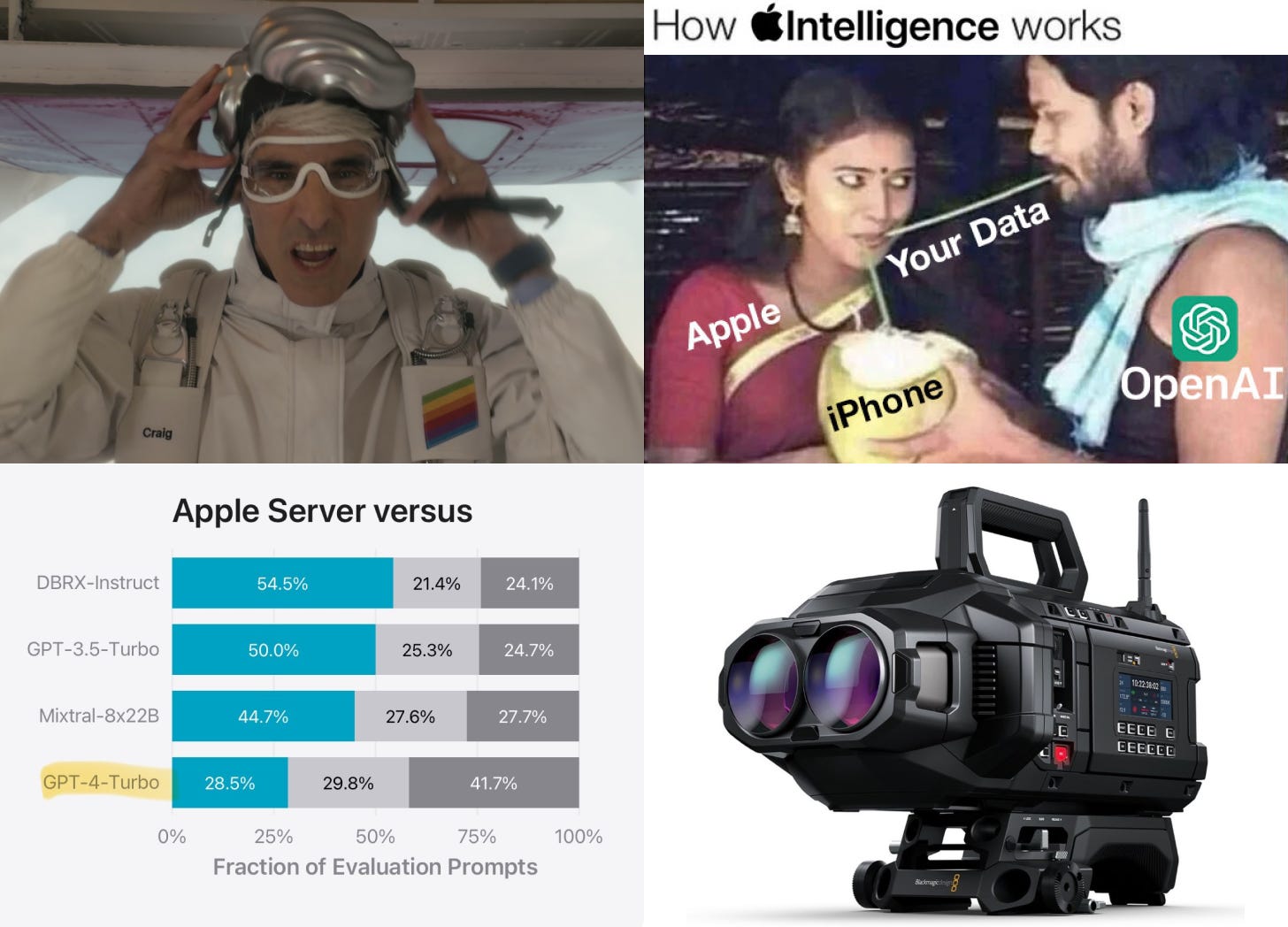

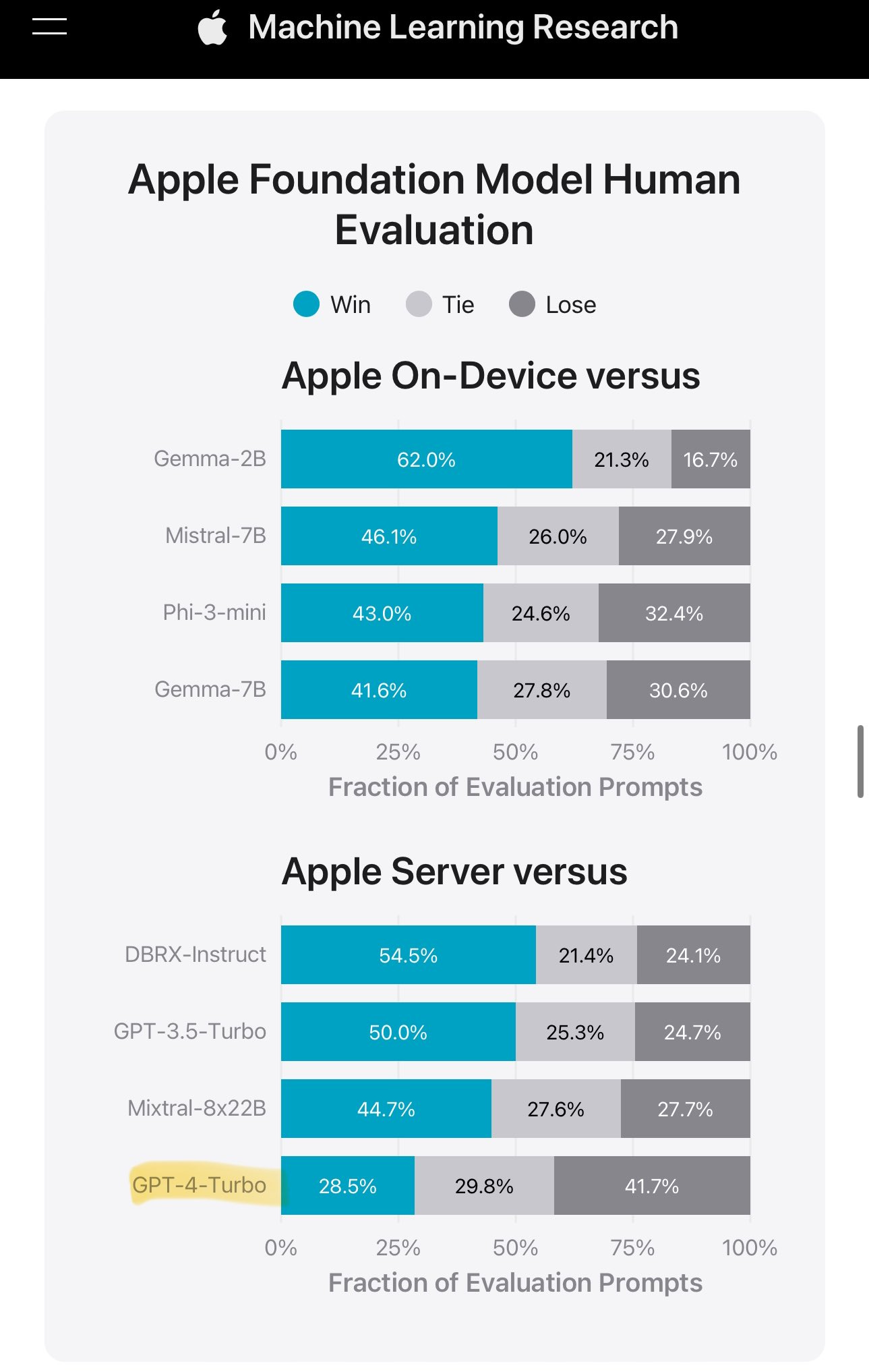

So why even bother partnering with OpenAI? Well, this one chart from Apple shows why, despite Apple rolling its own foundation models:

Apple’s own server models weren’t anywhere close to GPT-4 Turbo performance in human evals. But Apple isn’t limiting itself to OpenAI: it has now been confirmed that Google Gemini will be an option in the future.

But how exactly do all these models work together?

The TL;DR is that Apple's approach has 3 tiers:

1. Handle as much as possible on-device with Apple’s own fine-tuned models: tasks like summarization, rewriting, etc.

2. If it's a more complex query, use Apple's larger models in their private cloud

3. If it's still more complex, send it over to ChatGPT with explicit consent from the user
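For the more code-minded, here's a toy sketch of that three-tier escalation. Apple hasn't published how it decides which tier handles a request, so the `complexity` score, the thresholds, and the `route_request` / `Tier` / `Request` names below are all hypothetical, purely to illustrate the escalation order and the consent gate on tier 3.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    ON_DEVICE = "on-device model"
    PRIVATE_CLOUD = "Apple private cloud model"
    THIRD_PARTY = "third-party model (e.g. ChatGPT)"
    DECLINED = "user declined third-party hand-off"


@dataclass
class Request:
    text: str
    complexity: float  # hypothetical 0.0-1.0 difficulty score; Apple's real signal isn't public


# Hypothetical cut-offs, chosen only for illustration.
ON_DEVICE_MAX = 0.4
PRIVATE_CLOUD_MAX = 0.8


def route_request(req: Request, user_consents_to_3p: bool) -> Tier:
    # Tier 1: most private and cheapest, e.g. summarization or rewriting.
    if req.complexity <= ON_DEVICE_MAX:
        return Tier.ON_DEVICE
    # Tier 2: larger Apple models running in Apple's private cloud.
    if req.complexity <= PRIVATE_CLOUD_MAX:
        return Tier.PRIVATE_CLOUD
    # Tier 3: the request leaves Apple's stack only with explicit user consent.
    return Tier.THIRD_PARTY if user_consents_to_3p else Tier.DECLINED


print(route_request(Request("Summarize this email thread", 0.2), user_consents_to_3p=False).value)
# → on-device model
```

The design point is the ordering: each escalation trades a bit of privacy for capability, and the last hop is gated on the user rather than on the model.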

The genius bit is building a ‘semantic index’ of all your private data to automagically provide context to AI models. The end result is that you never really need to ‘prompt’ and copy-paste random bits, and you also get a truly next-gen search experience (e.g. “pull up that podcast my friend sent me last week”).
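To make the ‘semantic index’ idea concrete, here's a minimal sketch, assuming a retrieval-style design: embed each personal item once, then rank items by similarity to a natural-language query. Apple hasn't detailed its implementation; the bag-of-words "embedding" here stands in for a learned embedding model, and `SemanticIndex`, `embed`, and `cosine` are hypothetical names.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    # Toy stand-in for a learned embedding: count lowercase word tokens.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse word-count vectors.
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class SemanticIndex:
    """Hypothetical on-device index over personal items (messages, mail, events...)."""

    def __init__(self) -> None:
        # Each entry: (source app, original text, precomputed vector).
        self.items: list[tuple[str, str, Counter]] = []

    def add(self, source: str, text: str) -> None:
        # Index time: embed once, so queries only need to embed the query itself.
        self.items.append((source, text, embed(text)))

    def search(self, query: str, k: int = 1) -> list[tuple[str, str]]:
        # Query time: rank every indexed item by similarity to the query.
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[2]), reverse=True)
        return [(source, text) for source, text, _ in ranked[:k]]


index = SemanticIndex()
index.add("Messages", "Sam: you have to hear this podcast episode about spatial video")
index.add("Mail", "Your flight to SFO is confirmed for Friday")
index.add("Calendar", "Dentist appointment at 3pm")
print(index.search("pull up that podcast my friend sent me last week"))
# → [('Messages', 'Sam: you have to hear this podcast episode about spatial video')]
```

Whether the real index is built on-device, in the cloud, or both is exactly one of the open questions below; the sketch only shows why precomputing vectors makes "no prompting needed" lookups cheap.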

Now depending on how you look at it, this is either terrifying or beautifully brilliant. So I’ll cover both sides and let you decide:

The bear case: Apple too has fallen (ft. Elon Musk)

The fact is Apple’s reality distortion field is strong. It’s kinda wild that with the “semantic index,” Apple is basically doing what Microsoft wants to do with Recall + Copilot, without most of the Big Brother backlash.

Semantic index means all your private content (messages, emails, photos, videos, calendar events, screen context, etc.) is processed and can be queried by AI models. It’s basically Apple’s version of Microsoft’s Recall, and a far more elegant implementation at that.

Get the new iOS or macOS and you’re opted into these AI features by default. But it comes with a great Apple privacy narrative. Meanwhile, Microsoft fumbled the ball talking about intermittent screenshots and “photographic memory” lol.

This semantic index is super useful for making AI contextual, both with Apple’s on-device models and when you hand a request off to third-party (3P) models like ChatGPT (and in the future Claude, Gemini, or whatever).

Super useful, to be honest, but it raises some obvious questions:

Is it possible to opt out of any of these AI features in iOS 18 and not have a semantic index created of all your private content?

How much of this semantic index is generated locally vs. in the cloud? Like is your phone running overnight to generate embeddings? I’d guess this is where Apple’s brand of cloud ‘confidential computing’ ft. Apple silicon comes in.

How much of this semantic index do 3P providers get access to when you send a query? Can you get visibility into exactly what’s being shared? OpenAI says “requests are not stored by OpenAI, and users’ IP addresses are obscured.”

Is user data used to improve Apple’s AI models? Will you have your own ‘personalized model’ that keeps getting better, or will techniques like federated learning be used to improve the ‘global model’ for everyone?

Now, would I go as far as Elon Musk and ditch Apple devices altogether? Probably not. And to understand why, we need to look at Apple’s new cloud architecture built around its own silicon. I really don’t think the stock market has priced in the significance of this expensive investment.

The bull case: Apple has set the new standard for privacy in the cloud, while bringing “AI for the rest of us”

So, I initially thought that with ‘Private Cloud Compute’ Apple was doing its usual thing and rebranding an existing technique, i.e. ‘Confidential Computing’. But I was wrong…

Turns out Apple has taken ‘Confidential Computing’ to the next level. It’s SO secure that they can’t even comply with law enforcement requests.

No data retention (unlike every other cloud provider)

No privileged access (even Apple SREs can’t see your data if they wanted to)

Custom hardware and OS (with Secure Enclave and Secure Boot to reduce attack surface)

Non-targetability (masks user requests so an attacker can’t route them to a compromised server)

Verifiable Transparency (allows researchers to inspect software images to check assurances + find issues)

It seems Apple has been working on this since well before the GenAI project, presumably for cloud processing of sensitive data from AR glasses.

So barring physical hardware access (in which case it’s RIP whether your data is in the cloud or on your iPhone), Apple has set the new standard for privacy in the cloud.

Immersive Capture Gets A Boost For Vision Pro

Among other things, spatial capture got a boost at WWDC. 2D photos in your library can be automagically converted into ‘spatial photos’ with that gorgeous parallax and depth. It’s safe to assume video conversion is right around the corner. I feel for the many 2D-to-3D conversion apps that will quickly fade in relevance. But hey, this isn’t the first time Apple has sherlocked its developers.

Meanwhile, on the professional end, we finally know what’s been hiding in those enclosures spotted on the sets of Apple TV productions last year. Turns out it’s a gorgeous Blackmagic camera capable of shooting 8K at 90 fps PER EYE (!). That is more than enough resolution to saturate the heck out of the Apple Vision Pro displays.

Prices TBD, but I’d safely assume it’s gonna be in the $20-40K range. That’s out of reach for most, so on the cheaper end, Canon has announced a new periscopic lens in the couple-thousand-dollar range, coming later this year.

Why we can’t fix bias with more AI w/ Patrick Lin, Ph.D.

This week I sat down with renowned tech ethicist Dr. Patrick Lin to unravel the thorny issue of bias in AI. Patrick is a fascinating cat. Affiliated with the likes of Stanford Law School and the U.S. Space Council, his research spans the ethics of frontier development (outer space and the Arctic), robotics (military systems and autonomous driving), artificial intelligence, cyberwarfare, and nanotechnology.

We dug into the hidden (and not-so-hidden) biases in AI. From historically inaccurate images to life-and-death decisions in hospitals, these biases reveal how AI mirrors our own flaws… But can we fix bias? Lin argues that technology alone won't suffice.

Check out Episode 4 of The TED AI Show, available wherever you get your podcasts (e.g. Spotify, Apple Podcasts, YouTube).

AI Quick Hits

China Gets Sora-tier AI video before the US

Trillion-dollar cluster talk is cool and all, but why does China get Sora-tier video generation before we do? Please remedy the asymmetry!

NVIDIA’s Earth2 Goes To Street Level — digital twin for climate science

Earth 2 is easily one of my favorite projects, helping countries and corporations predict and respond to extreme weather. And now it’s going street level.

Tough few weeks for Adobe — their new terms are causing a massive uproar among creatives


It’s been a tough week for Adobe. Their new terms are causing a massive uproar among creatives, who are letting Adobe know on social media. The ToS seems to give Adobe free rein, including human review of your files if you upload them to Adobe’s cloud, thanks to a very broad and permissive sublicense.

That’s it for this edition. As always, hit me up with thoughts, and I’ll see y’all in the next one!

Cheers,