Beyond the Blue Dot: The Rise of Visual Positioning Systems (VPS)

Decoding VPS: The foundational tech for the next generation of AR and robotics to navigate the world.

GPS is 50 years old. While it’s the reason you can find a latte in a new city, it was never designed for the precision required by the next generation of technology. If you’ve ever seen your blue dot drift aimlessly while walking between skyscrapers, you’ve experienced the limits of satellite-based positioning.

As someone who spent six years building this tech at Google, I’ve seen firsthand how we’re moving from satellites in the sky to pixels on the ground.

Check out my latest video for a deep dive into the necessity of VPS and a look at the tools shaping this future:

VPS: The Precision Layer for the 3D World

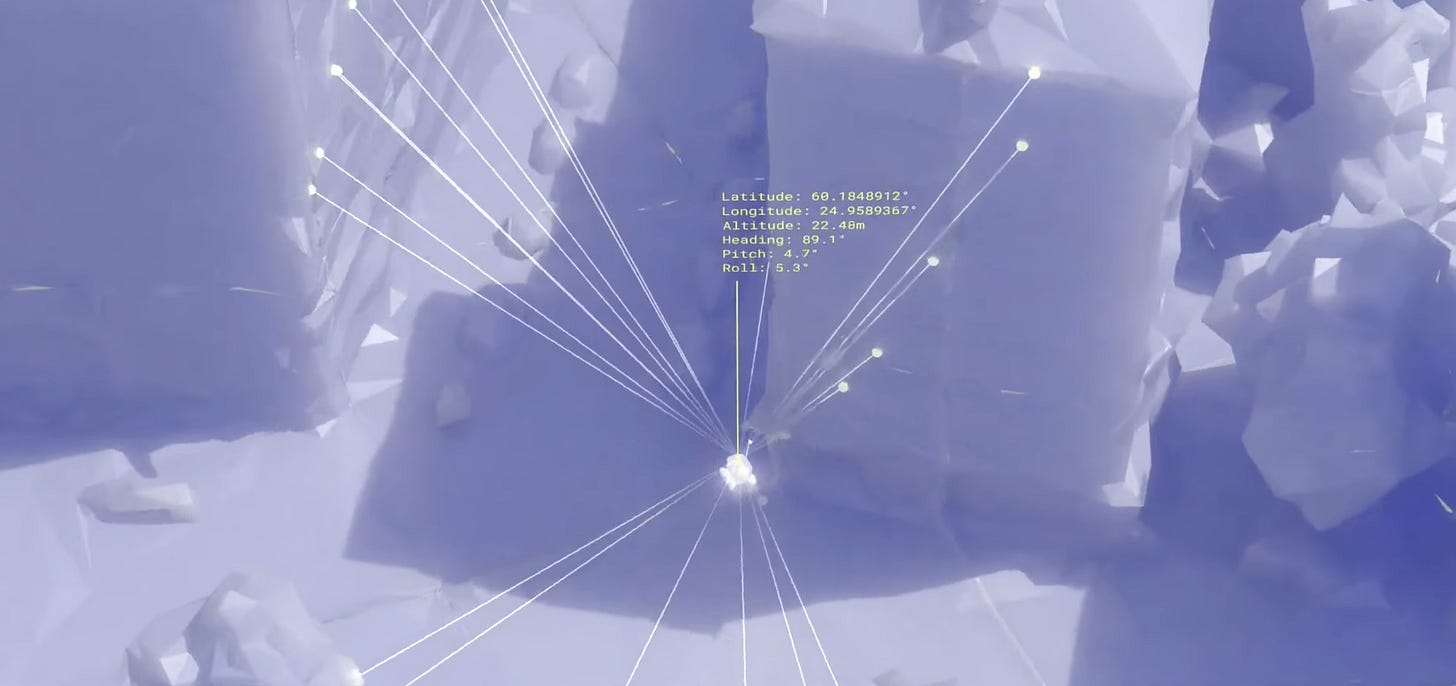

The solution to GPS’s coarse accuracy is VPS (Visual Positioning System). Instead of relying on timestamps from satellites 12,000 miles away, VPS uses the camera on your device to look at the world and match it against a pre-existing 3D model.

Sub-meter Positioning: While GPS is accurate to a few meters, VPS enables centimeter-level accuracy.

Rotational Accuracy: VPS eliminates the “which way am I facing?” problem, providing precise orientation that’s critical for AR and robotics.

The Google & Apple Advantage: By leveraging over a decade of Street View data, Google can provide high-fidelity localization across 100+ countries, and Apple can use imagery from its Look Around feature in 80+ cities.
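To make the "match what the camera sees against a 3D model" idea a bit more concrete, here is a toy sketch in Python. It is not how Google's or Apple's VPS is implemented. It is a flat, 2D stand-in that assumes the hard part (recognizing which landmarks in view correspond to which landmarks in the map) is already done, and then recovers position and heading with a closed-form rigid alignment. The function names, landmark coordinates, and pose are all made up for illustration.

```python
import math

def estimate_pose_2d(observed, mapped):
    """Find (x, y, heading) so that each mapped point is approximately
    rotate(observed point, heading) + (x, y).

    observed: landmark positions in the device's own frame (meters)
    mapped:   the same landmarks in the map frame (meters)
    """
    n = len(observed)
    if n < 2 or n != len(mapped):
        raise ValueError("need at least two matched landmark pairs")

    # Centroids of both point sets.
    ocx = sum(p[0] for p in observed) / n
    ocy = sum(p[1] for p in observed) / n
    mcx = sum(p[0] for p in mapped) / n
    mcy = sum(p[1] for p in mapped) / n

    # Accumulate dot and cross products of the centered points;
    # their ratio gives the best-fit rotation angle.
    s_cos = s_sin = 0.0
    for (ox, oy), (mx, my) in zip(observed, mapped):
        ax, ay = ox - ocx, oy - ocy
        bx, by = mx - mcx, my - mcy
        s_cos += ax * bx + ay * by
        s_sin += ax * by - ay * bx
    heading = math.atan2(s_sin, s_cos)

    # Translation maps the rotated observed centroid onto the map centroid.
    c, s = math.cos(heading), math.sin(heading)
    x = mcx - (c * ocx - s * ocy)
    y = mcy - (s * ocx + c * ocy)
    return x, y, heading

def to_device_frame(map_point, pose):
    """Hypothetical helper: simulate what the device would observe."""
    x, y, heading = pose
    dx, dy = map_point[0] - x, map_point[1] - y
    c, s = math.cos(heading), math.sin(heading)
    return (c * dx + s * dy, -s * dx + c * dy)

# Made-up scenario: device at (10, 5) in the map, facing 30 degrees.
true_pose = (10.0, 5.0, math.radians(30.0))
map_landmarks = [(12.0, 9.0), (15.0, 4.0), (8.0, 11.0)]
seen = [to_device_frame(m, true_pose) for m in map_landmarks]

x, y, heading = estimate_pose_2d(seen, map_landmarks)
print(f"position=({x:.2f}, {y:.2f}) m, heading={math.degrees(heading):.1f} deg")
```

Notice that position and heading fall out of the same solve, which is why visual localization answers "where am I?" and "which way am I facing?" together. A real system works in 3D with noisy image features and many more matches, so treat this purely as intuition.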

Why This Matters: Robots, Drones, and AR

We are moving into an era of autonomous agents and spatial computing where “close enough” isn’t gonna cut it.

Robotics: A delivery robot needs to know the difference between the street and the sidewalk. VPS provides the map-to-world alignment to make that possible.

Contested Environments: In areas where GPS signals are jammed or denied, drones can use VPS and a camera to keep navigating accurately.

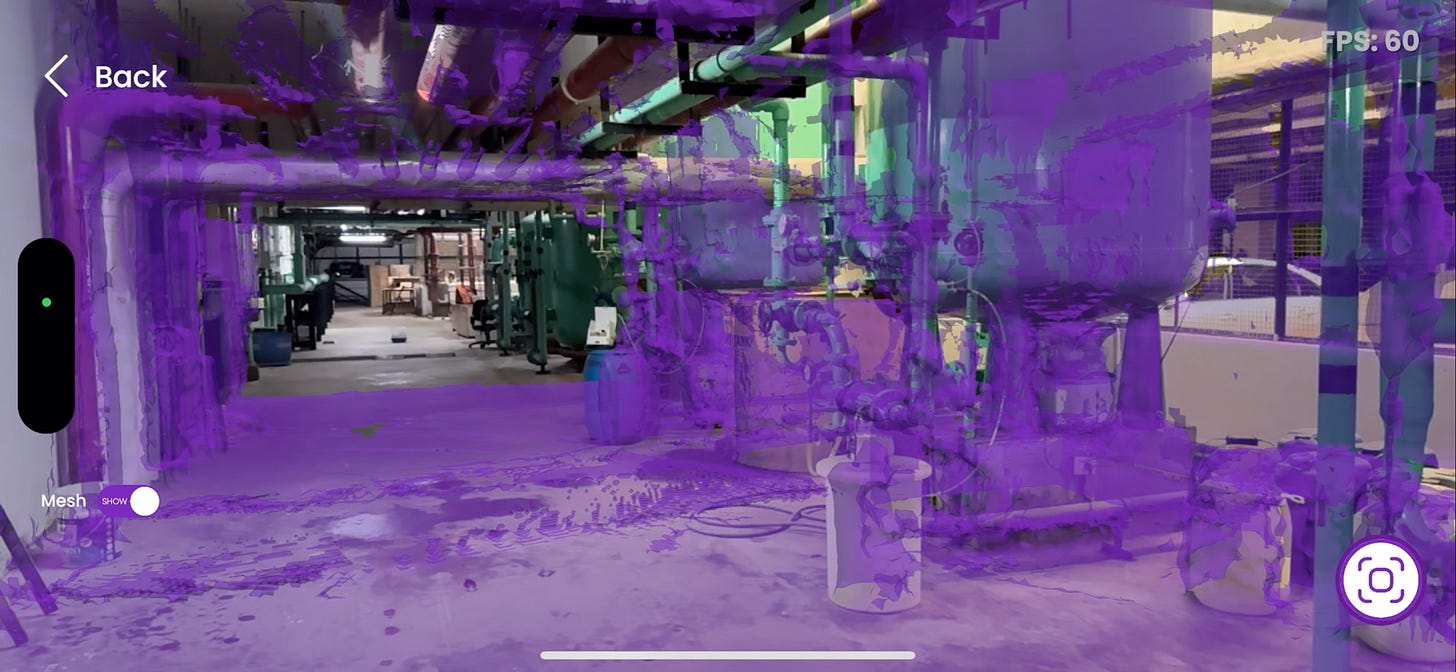

Persistent AR: Imagine leaving a digital sticky note on a specific piece of equipment for a colleague. VPS allows 3D annotations to stay locked to the physical world, even when you walk away.
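The delivery robot example comes down to simple arithmetic: you can only say which side of the curb you're on if your position error is smaller than your distance to the curb. Here is a tiny sketch of that check. The 0.5 m distance and the two error values are assumed numbers chosen to represent "a few meters" versus "centimeter-level", and the function name is hypothetical.

```python
def can_tell_side_of_curb(distance_to_curb_m, position_error_m):
    """True if the whole error interval sits on one side of the curb."""
    return abs(distance_to_curb_m) > position_error_m

# Assumed scenario: the robot is driving 0.5 m from the curb line.
distance_to_curb = 0.5

gps_like = can_tell_side_of_curb(distance_to_curb, position_error_m=3.0)
vps_like = can_tell_side_of_curb(distance_to_curb, position_error_m=0.05)

print(f"meter-level error: side known? {gps_like}")
print(f"centimeter-level error: side known? {vps_like}")
```

With a few meters of uncertainty, the robot's possible positions straddle the curb, so street and sidewalk are indistinguishable. With a few centimeters, they aren't.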

The Emerging Ecosystem

It’s not just the tech giants. A new wave of startups like Multiset.AI is making it possible for anyone to scan a street with an iPhone and create a localized 3D model in real time. We’re even seeing high-end capture devices like XGrids’ PortalCam using Gaussian Splatting to turn LiDAR and images into photorealistic, navigable scenes.

The Big Picture: Spatial Intelligence 🔭

VPS is the “where,” but the next frontier is the “what.” The real magic happens when we combine visual positioning with AI that understands the semantic meaning of the environment—a field known as Spatial Intelligence. We are moving toward world models that don’t just map space but understand it, opening the door for robots to step inside.

If you found this useful, share it with fellow reality mappers. The future’s too interesting to navigate alone.

Cheers, Bilawal Sidhu

bilawal.ai

The evolution from GPS to VPS represents exactly the kind of precision leap that's essential for spatial intelligence systems. Your point about centimeter-level accuracy being transformative for robotics resonates with the broader shift we're seeing - where environmental understanding isn't just about coordinates but semantic comprehension of space. The parallel between VPS providing spatial grounding and the way consciousness needs perceptual anchoring (as discussed in some recent AI consciousness research) is intriguing. Both require moving beyond coarse approximations to precise, context-aware positioning in their respective domains. The democratization of 3D mapping through tools like Multiset.AI could accelerate this in ways similar to how open datasets transformed machine learning.

The shift from satellite-based to vision-based localization is such a critical unlock for autonomous systems at street level. GPS's meter-level accuracy was fine for turn-by-turn navigation but completely breaks down when a delivery robot needs to distinguish sidewalk from roadway or dock at a specific apartment entrance. The centimeter-level precision from VPS basically transforms what's operationally feasible for last-mile robotics. What I find really compelling is how this pairs with the semantic understanding layer you mentioned. Once you combine precise localization with scene comprehension, robots can handle edge cases that would've been impossible with GPS alone.