GPT-4 & Multi-Modal Magic - Why The Heck Does Vision Matter? 👀 Plus AI Twitter Space Hangouts 🎙

Learn Why GPT-4's Ability to Understand Images Transforms How We Interact with Artificial Intelligence. Plus AI hangouts on Visual ChatGPT, Wonder Studio and more!

GPT-4 is multi-modal. But why does that matter? 🧵

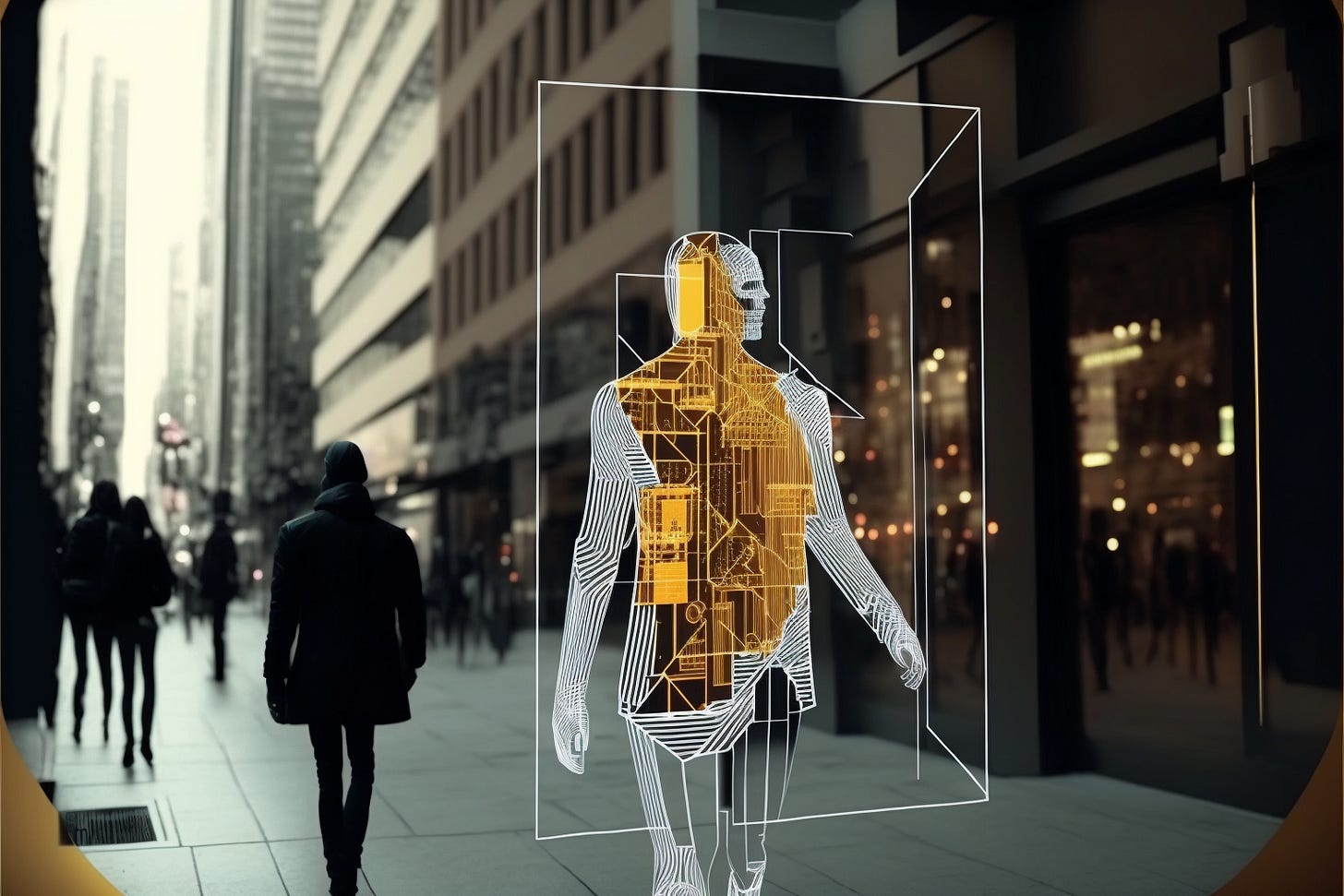

🖼 Simply put: a picture is worth a thousand words. GPT-4 narrows the communication gap with AI by accepting images as input, so we can express our intent more precisely.

TL;DR: What ControlNet did for image generation (letting you guide a model with sketches, poses and depth maps instead of text alone), GPT-4 will do for LLMs. Let's explore why.

Limitations of Language:

Conveying intent purely in text requires an exacting command of the language, and even then it leaves too much open to interpretation.

This was a big problem with text-to-image models. Good for brainstorming. Not so good if you have a specific vision in mind.

Augmenting Language with Vision:

GPT-4 can "perceive" the contents of images, which leaves less to interpretation. You can augment your text query with photos, diagrams or even screenshots to better express your intent. Create a slide, diagram or UX flow, and GPT-4 will understand the context.

Pivot Party Problems:

This is also wild because GPT-4 combining vision and language in the *same* foundation model just made a bunch of startups irrelevant overnight. For instance, wireframe-to-website would’ve been a whole ass startup a day ago. Now it’s just one example in an AI tech demo 😅

I feel for the founders who are having some interesting investor calls right now. And images are just the beginning. Obvious next steps for multi-modal:

- Video: understand the spatial & temporal context embedded in a sequence of images (eye contact, expressions, interactions, etc.)

- Audio: understand tonalities & emotional context embedded in speech & music

- And of course my favorite: full blown 3D perception and dynamic scene graphs!

Closing Out + AI Twitter Spaces That Should Really Be a Podcast 🎙

That’s all for this update. I’ve been busy at SXSW meeting so many amazing creators and technologists, and yet (!) the barrage of amazing startup news is relentless. In the past week alone we’ve seen Google enter the fray, GPT-4, Midjourney v5, Wonder Studio and so (!) much more.

For now, here are some deeper dives and great Friday (FrAIday?) conversations. These are some awesome Twitter Spaces where the AI art community gathers to talk shop, and they're really just podcast hangouts 🍻

VisualChatGPT, Wonder Studio and Multi-Modal Magic: https://twitter.com/i/spaces/1rmxPkPzOzXJN?s=20

ControlNet hype, node-based AI creation tools, physical-to-digital products, and web3 generative metaverses: https://twitter.com/i/spaces/1YpKkgnrbgNKj?s=20

If you want to join these live — they’re every Friday at 3pm EST, so you should hop on to the next one!

1. RT this thread or share this article with your creative tech frenz

2. Follow me on Twitter or your platform of choice for more dank content

3. And if you aren’t already, subscribe below to get these right to your inbox