Multi-ControlNet & Open Source AI Video Generation

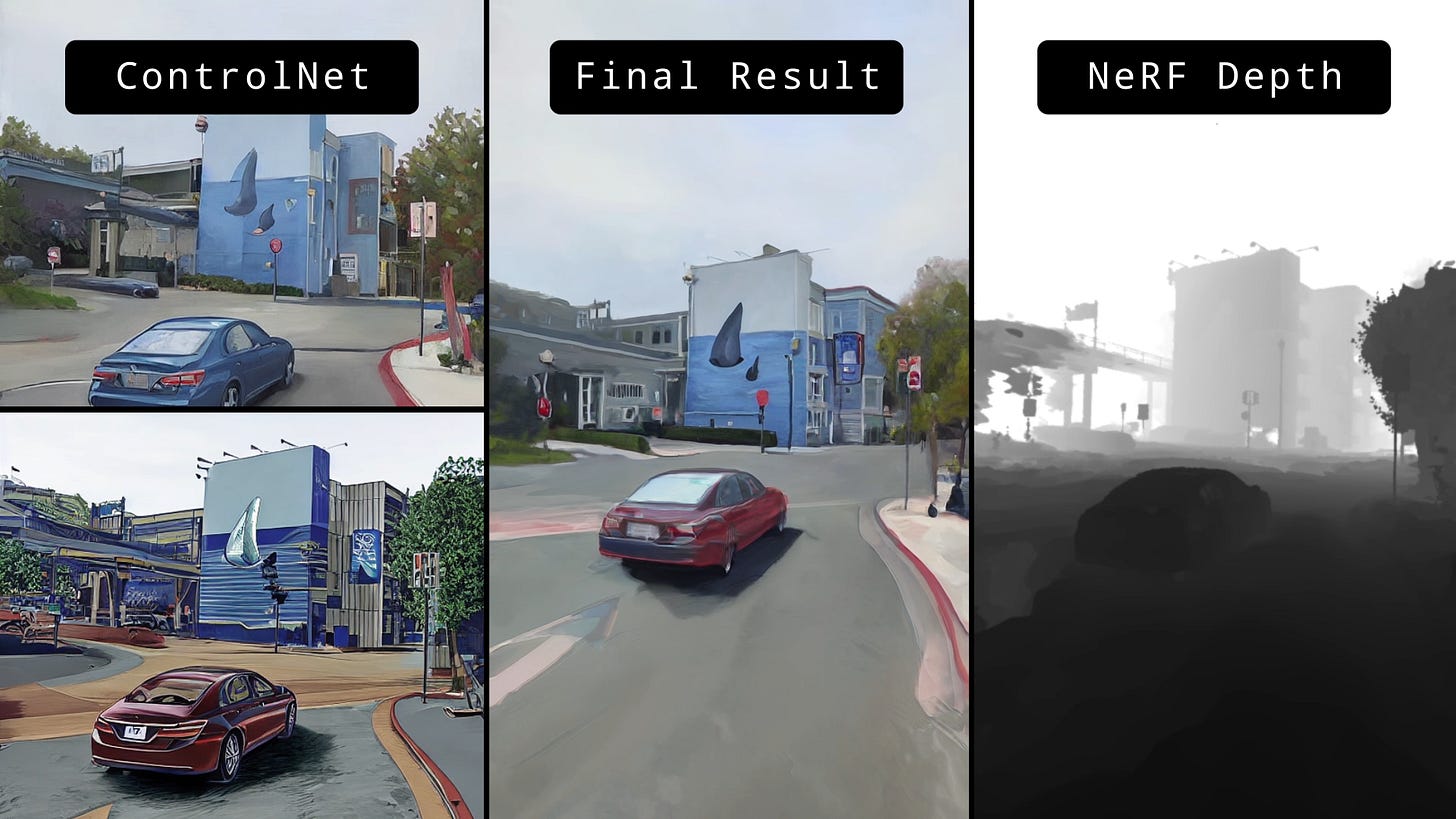

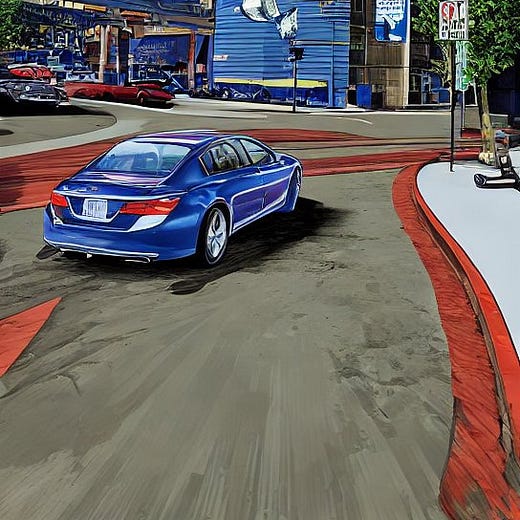

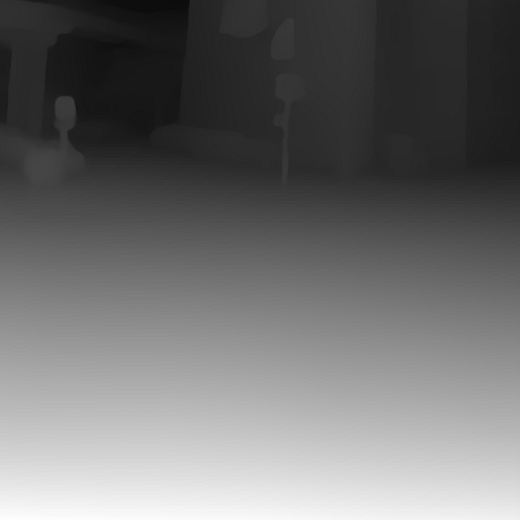

Full breakdown + takeaways from using ControlNet to make a video2video workflow. I use NeRFs here, but it'll apply to any 3D rendered or live action input. Let's get into it!

ControlNet continues to capture the imagination of the generative AI community, myself included! This thread continues my deep dive into ControlNet and its implications for creators and entrepreneurs.

ICYMI, here’s the last post using ControlNet to redecorate a 3D scan of a room. I plan to make more videos + posts like that one, so stay tuned!

🪄 Honestly though, this wave of generative AI makes me feel like I'm 11 again, discovering the VFX & 3D animation software that gave me powers of digital sorcery to blend reality & imagination. It was fun to do this interview about my journey as a YouTube creator & product manager, where I go deeper 🙏🏾

No doubt generative AI will bring out the child in all of us. It’s like we haven’t yet tried all the primitives at our disposal in every possible combination, so we’re learning new things every day.

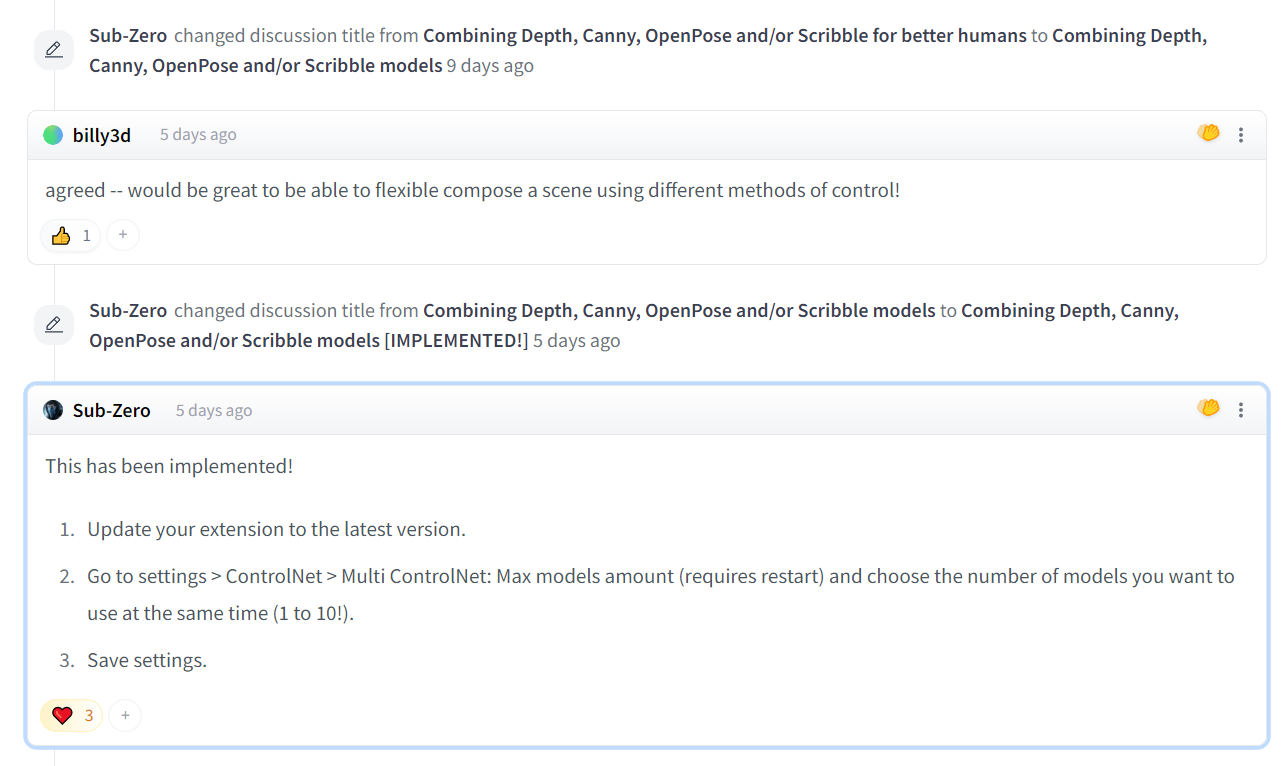

Plus, new primitives keep dropping. A few of you realize we need multi-ControlNet, make a feature request, and bam, it’s implemented in a few days. And we didn’t even have to write a PRD or sit through reviews 😉

Now on to the workflow to make 🔥 videos with the latest in open source AI!

And that's a wrap! I’d love to keep sharing what I'm learning with the open source AI & creator community, so if you found this helpful I'd appreciate it if you:

1. RT this thread or share this article with your creative tech frenz

2. Follow me on Twitter for more dank content

3. And if you aren’t already, subscribe below to get these right to your inbox
