Playable Reality Is Here. Stepping Inside Genie - Google's World Model

A hands-on look at the public launch of Project Genie and the dawn of playable reality. Turn text & images into interactive simulations.

Today is the day. While we’ve been buzzing about the potential of the Genie 3 model, Google DeepMind has officially opened the doors to Project Genie, bringing what I can only describe as “playable reality” to the public.

We've moved past simply watching videos; now we can step inside them. With today's release, the boundary between static media and interactive simulation is vanishing.

Watch the full video here:

The Move from Watching to Walking

For the past year, we’ve been tracking the rapid evolution of video generators. But Genie 3 changes the substrate entirely. Instead of a linear clip, you provide a text prompt or an image, and Project Genie, which pairs Genie 3 with Nano Banana Pro, spawns an interactive 3D simulation that you can navigate in real time.

During my early hands-on testing, I explored everything from infinite backrooms to underwater shipwrecks with impressive caustic effects and dynamic lighting. One truly mind-blowing moment was stepping inside a vintage photo. The model inferred an infinite 3D environment from it, letting me walk the streets of a retro San Francisco and explore corners that weren’t visible in the original frame. I can’t help but imagine the opportunities this opens up: educators could step inside a moment in time, and artists could bring their own work to life.

Emergent Intelligence: The GPS Moment

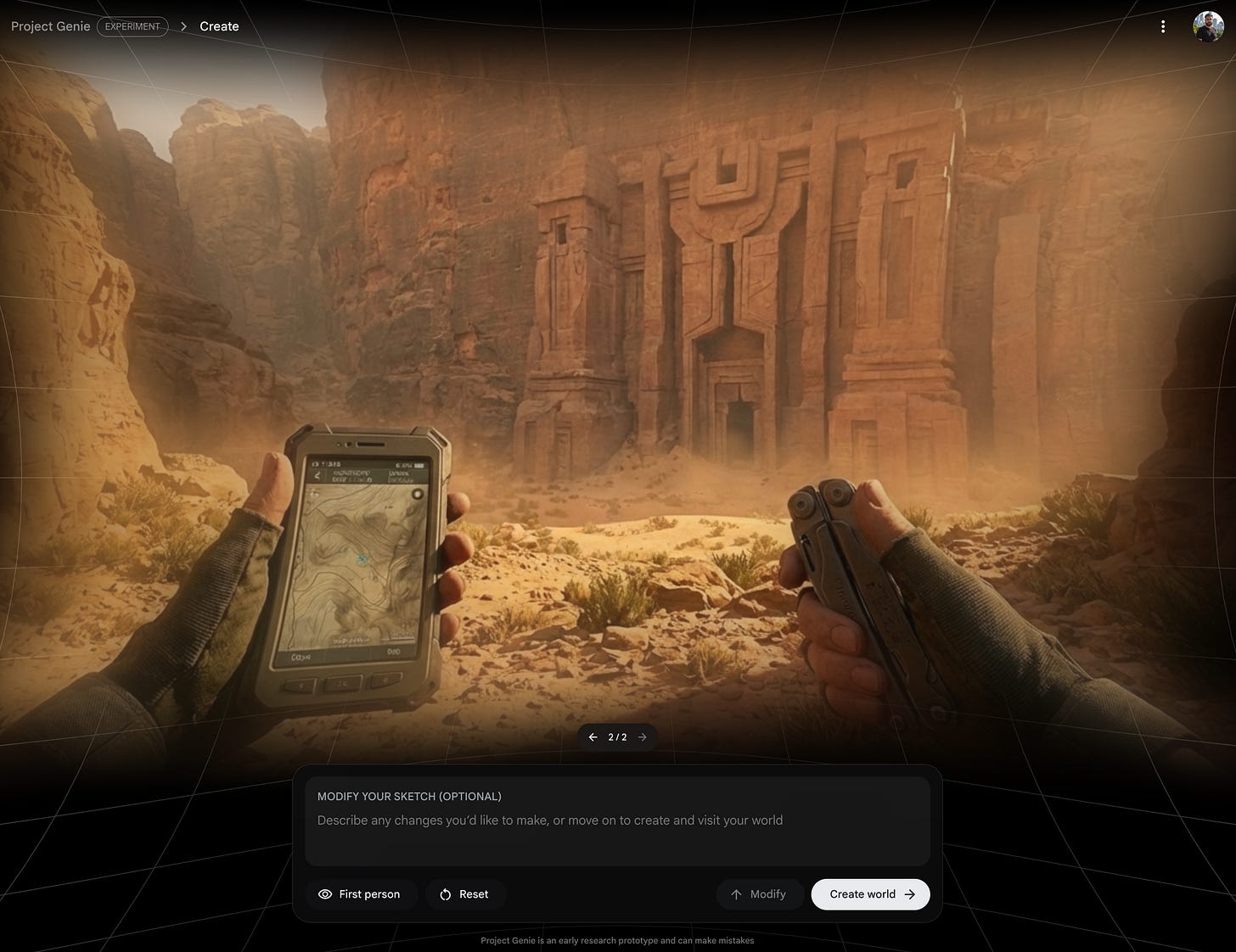

One of the most fascinating aspects of Genie 3 is its emergent behavior. In one generation, I prompted for a first-person view of someone holding a GPS device in a desert. As I moved around the environment, the mini-map on the device stayed perfectly in sync with my movement, an affordance the model learned purely from its training data rather than through explicit programming.

Insights from Google DeepMind

I had the chance to sit down with Jack Parker-Holder (Research Scientist) and Diego Rivas (Product Manager) from the DeepMind team to discuss what this means for the future of creativity.

A few key takeaways from our conversation:

Virtual Production: Creatives can use Genie as a tool for rapid ideation—essentially teleporting onto a virtual set to test camera angles and lighting before a single frame is shot.

Prototyping Worlds: While it isn’t a full game engine, it allows developers to iterate on world concepts at a speed that was previously impossible.

Shattering the 60-Second Barrier: Generations are currently capped at one minute, but the team is already exploring several ways to extend beyond that and doesn’t see the limit as a fundamental barrier.

Early Access is Live

If you are a Google AI Ultra subscriber in the US, early access is live today, January 29th. You can jump into Project Genie right now and start building.

For those looking to dive deeper into the technical mechanics and creative implications of this tech, I highly recommend checking out these previous deep dives on the channel:

Is Google’s Genie 3 About to Replace Game Engines?: My original overview of Genie 3.

World Models Will Break the Internet: A foundational look at why world models are the next frontier beyond LLMs.

Many thanks to the Google DeepMind team for the early access. We’re at the very beginning of this journey into playable reality. I’m excited to see what worlds you all decide to build.

If this gave you something to think about, share it with fellow reality mappers. The future’s too interesting to navigate alone.

Cheers,

Bilawal Sidhu

The world isn't ready for world models!